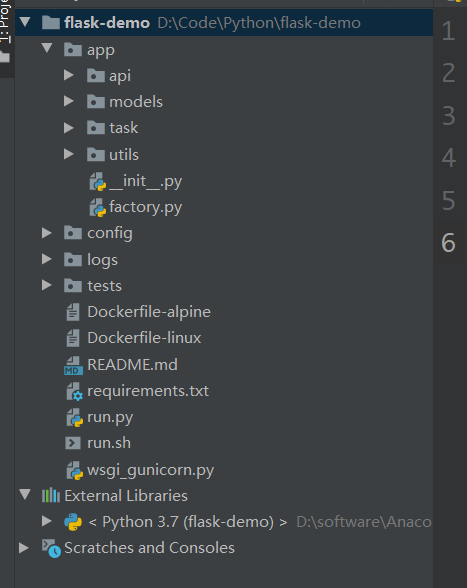

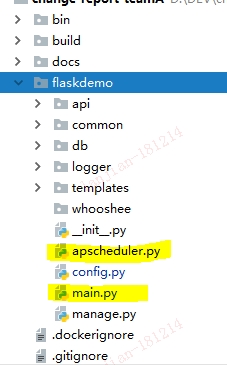

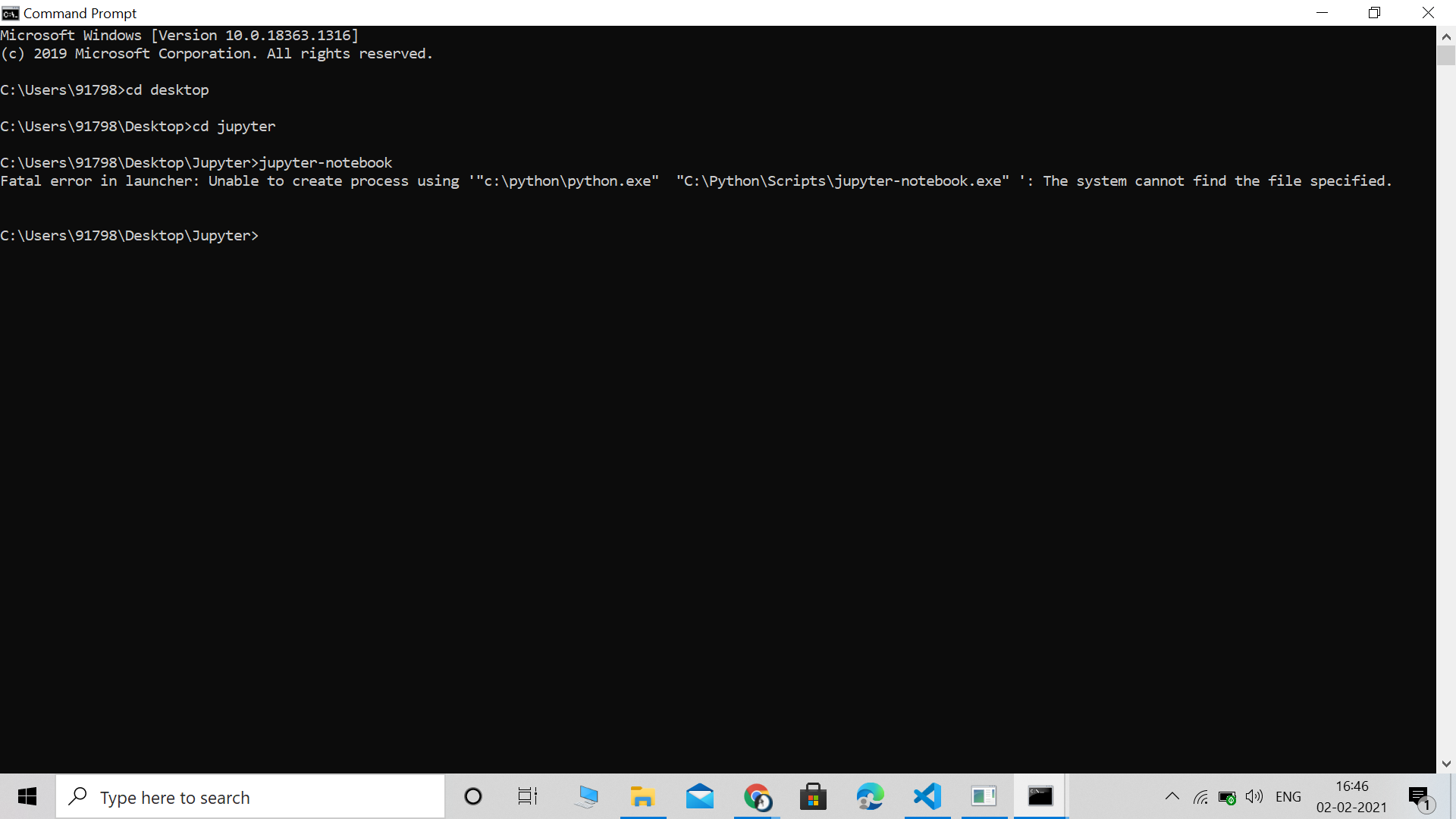

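Uvicorn is an ASGI web server. Until recently, Python lacked a minimal, low-level server/application interface for asyncio frameworks. The ASGI specification fills this gap, and means we're now able to start building a common set of tooling usable across all asyncio frameworks.

A Flask application factory that creates an APScheduler scheduler can start like this:

```python
from flask import Flask
from apscheduler.schedulers.background import BackgroundScheduler

from config import AppConfig
from application.jobs import periodic_job


def create_app():
    app = Flask(__name__)
    app.config.from_object(AppConfig)  # assumption: AppConfig is a config class

    # This will get you a BackgroundScheduler with a MemoryJobStore named "default"
    # and a ThreadPoolExecutor named "default" with a default maximum thread count of 10.
    scheduler = BackgroundScheduler()
    # Assumption: the interval is illustrative; the source does not give one.
    scheduler.add_job(periodic_job, "interval", minutes=1)
    scheduler.start()
    return app
```

A Twilio SMS app uses the `datetime` package together with Flask and Twilio's TwiML helper:

```python
from flask import Flask, request
from twilio.twiml.messaging_response import MessagingResponse

app = Flask(__name__)
```

For deployment, declare the web process as `web: gunicorn app:app` and install Gunicorn in your environment by running `pip install gunicorn`.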

APScheduler · Githubmemory

APScheduler, Flask, Gunicorn

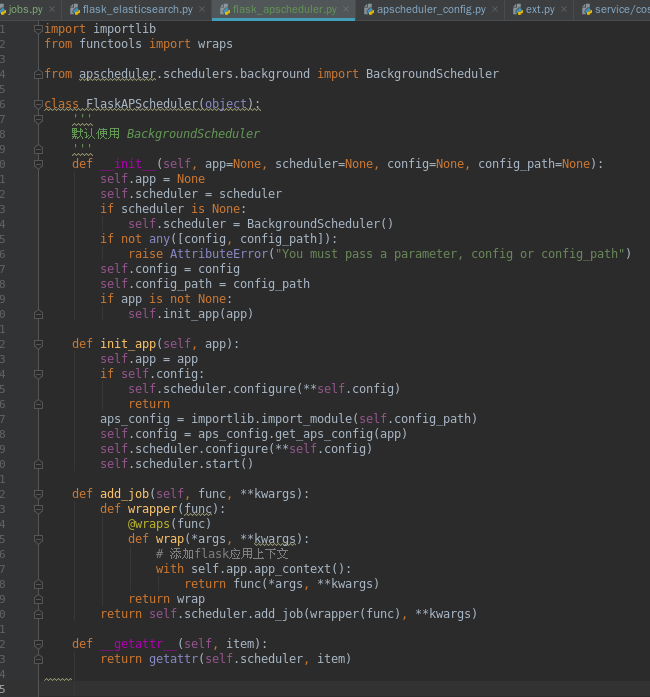

APScheduler, Flask and Gunicorn: while starting Gunicorn, I add a job to APScheduler in Gunicorn's `on_starting()` hook, and it works fine. Then I tried to stop/pause that job, so I created a RESTful API named `remove_job(job_id)`. In that API I invoked TaskManager's `remove_job`/`pause_job` functions, but neither of them works. APScheduler said the job already

I'm trying to use APScheduler with a PostgreSQL database via an asyncpg connection. I thought it would work, because asyncpg supports SQLAlchemy (ref.), but it isn't working.
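A minimal sketch of the `on_starting()` approach, assuming a Gunicorn config file named `gunicorn.conf.py` and a hypothetical job function `tick`. `on_starting` and `on_exit` are real Gunicorn server hooks; everything else here is illustrative. Because this scheduler lives only in the master process, a `remove_job` call made inside a worker (which is where REST requests are handled) acts on a separate process's object. That is a plausible reason the pause/remove calls described above have no effect. Controlling the master's scheduler from workers would need some form of inter-process communication.

```python
# gunicorn.conf.py -- run with: gunicorn -c gunicorn.conf.py app:app
# Sketch only: tick() and the 30-second interval are hypothetical.
from apscheduler.schedulers.background import BackgroundScheduler

bind = "127.0.0.1:8000"
workers = 4

scheduler = BackgroundScheduler()


def tick():
    """Hypothetical job; replace with your own task."""
    print("tick")


def on_starting(server):
    # Gunicorn calls this hook once, in the master process, before the
    # master is initialized and before any worker is forked. The scheduler
    # thread started here is not copied into the forked workers.
    scheduler.add_job(tick, "interval", seconds=30, id="tick", replace_existing=True)
    scheduler.start()
    server.log.info("APScheduler started in the Gunicorn master process")


def on_exit(server):
    # Called just before Gunicorn exits; stop the scheduler thread.
    if scheduler.running:
        scheduler.shutdown(wait=False)
```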

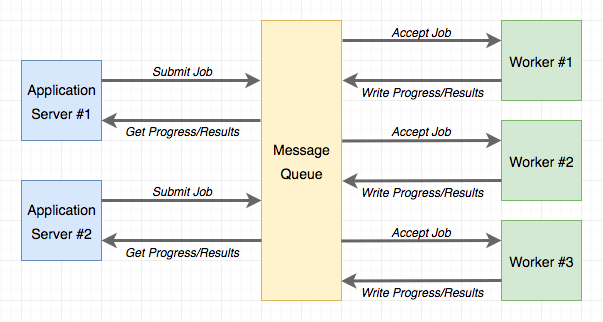

Need help running a single APScheduler with multiple Gunicorn/Flask workers.

The APScheduler package (v3.10) is used to schedule the reminder messages.

Solution 1 is to run APScheduler in a dedicated process: start a process that runs only a single `BlockingScheduler` instance.

Solution 2 is to run APScheduler in the Gunicorn master process rather than in the worker processes. The Gunicorn hook function `on_starting()` is called before the master process is initialized, and the scheduler thread started inside it is not carried over into the forked workers.

Flask: This question already has answers here: Why does running the Flask dev server run itself twice?

To use Gunicorn with these commands, specify it as a server in your configuration file:

```ini
[server:main]
use = egg:gunicorn#main
host =
port = 8080
workers = 3
```

This approach is the quickest way to get started with Gunicorn, but there are some limitations. Gunicorn will have no control over how the application is loaded, so settings such

First, you should use a WebSocket or polling mechanism to notify the frontend about changes that happened. I use the Flask-SocketIO wrapper and am very happy with async messaging for my tiny apps. Next, you can do all the logic you need in separate thread(s) and notify the frontend via the SocketIO object (Flask holds a continuous open connection with every frontend client).
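The dev server "runs itself twice" because, with the reloader enabled (the default for `app.run(debug=True)`), Werkzeug keeps a watcher process and starts a child process that actually serves requests. The child has the environment variable `WERKZEUG_RUN_MAIN` set to `"true"`. A minimal guard, with `should_start_scheduler` as a hypothetical helper name:

```python
import os


def should_start_scheduler(use_reloader, environ=None):
    """Return True only in the process that actually serves requests.

    With the Werkzeug reloader enabled, the app module is imported twice:
    once in the watcher (parent) process and once in the serving child,
    where Werkzeug sets WERKZEUG_RUN_MAIN="true". Without the reloader,
    for example under Gunicorn, there is only one import per process.
    """
    env = os.environ if environ is None else environ
    if not use_reloader:
        return True
    return env.get("WERKZEUG_RUN_MAIN") == "true"
```

Usage: `if should_start_scheduler(use_reloader=app.debug): scheduler.start()`. Alternatively, pass `use_reloader=False` to `app.run()` while developing with a scheduler.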

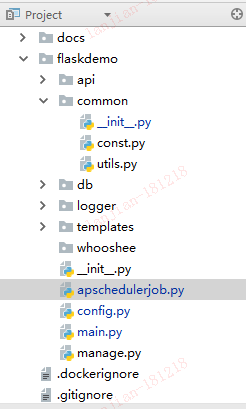

(7 answers) Closed 4 years ago. I have a problem when I am using APScheduler in my Flask application. In my `view.py` file I am writing like this

Dockerfile:

```dockerfile
FROM python:3.7
# Create a directory named flask
RUN mkdir flask
# Copy everything to flask folder
COPY . /flask/
# Make flask as working directory
WORKDIR /flask
# Install the Python libraries
RUN pip3 install --no-cache-dir -r requirements.txt
EXPOSE 5000
# Run the entrypoint script
CMD ["bash", "entrypoint.sh"]
```

The packages required for this

There are five processes created by uWSGI, each running a Flask app along with an APScheduler instance, so there are 5 different scheduler instances in total. When we send a request to an endpoint, uWSGI passes the request to one of the processes.
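One common way to keep five processes from starting five schedulers is an exclusive file lock: the first process to take it starts the scheduler, and the others skip it. This is a sketch under stated assumptions. It is POSIX-only (`fcntl`). Each process is assumed to import the app itself, as with uWSGI `lazy-apps` or Gunicorn without `--preload`. The helper name and lock path are hypothetical.

```python
import fcntl


def try_acquire_scheduler_lock(path):
    """Try to take an exclusive, non-blocking flock() on `path`.

    Returns the open file object if this process won the lock; keep it
    referenced for the life of the process, because closing it releases
    the lock. Returns None if another process already holds the lock.
    """
    lock_file = open(path, "w")
    try:
        fcntl.flock(lock_file, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        # Another open file description (another process) holds the lock.
        lock_file.close()
        return None
    return lock_file
```

Usage: `_lock = try_acquire_scheduler_lock("/tmp/myapp-scheduler.lock")`, then `if _lock is not None: scheduler.start()`. The operating system releases the lock when the holding process exits. If that worker is recycled, no scheduler runs until some process tries to take the lock again.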

Aftab Hussain

Flask-APScheduler: after scheduled jobs are persisted to storage, how do you load them back from the database? · 有解無憂

How to use Flask-APScheduler in your Python 3 Flask application to run multiple tasks in parallel from a single HTTP request: when you build an API endpoint that serves HTTP requests to work on long-running tasks, consider using a scheduler. Instead of holding up an HTTP client until a task is completed, you can return an identifier for the client to query the task status.

Worker timeouts: `$ gunicorn hello:app --timeout 10`. See the Gunicorn docs on worker timeouts for more information.

Max request recycling: if your application suffers from memory leaks, you can configure Gunicorn to gracefully restart a worker after it has processed a given number of requests. This can be a convenient way to help limit the effects of the memory leak.
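The same two settings can also live in a Gunicorn config file. This sketch assumes the file is named `gunicorn.conf.py` and passed with `-c`. `timeout`, `max_requests` and `max_requests_jitter` are Gunicorn settings, but the values shown are illustrative, not recommendations.

```python
# gunicorn.conf.py -- sketch; run with: gunicorn -c gunicorn.conf.py hello:app
workers = 3

# Equivalent to --timeout 10: a worker that stays silent for more than
# 10 seconds is killed and restarted, so long-running work belongs in a
# scheduler or background job rather than in the request handler.
timeout = 10

# Max request recycling: gracefully restart each worker after roughly
# 1000 requests; the random jitter (0..50) staggers the restarts so that
# workers do not all recycle at the same moment.
max_requests = 1000
max_requests_jitter = 50
```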

Deploying Flask-APScheduler with Gunicorn: a record of pitfalls · Jeffrey's blog

APScheduler topic · Giters

| Item | Command option | Default | Description |
|---|---|---|---|
| timeout | `-t`, `--timeout` | 30 | If a worker does no work for this many seconds, it is restarted. Specifying 0 means infinite. |

I have a Flask server which serves both Socket.IO and REST clients. My problem is that whenever a client connects to my server, the server starts to block all REST calls until the client disconnects. This behaviour only occurs on my EC2 instance, which runs Ubuntu 18.04. I'm using eventlet and Gunicorn to run the server behind nginx.

Check the one with "flask" in the path and note down its PID (process ID). This should be the second column of the output.

I am Teresia Wangari, currently studying Computer Science at the
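Finding that PID can be scripted. The sketch below assumes the listing comes from `ps aux`, where the PID is the second whitespace-separated column, and uses a hypothetical helper name. You would obtain the text with, for example, `subprocess.run(["ps", "aux"], capture_output=True, text=True).stdout`.

```python
def pids_matching(ps_output, needle):
    """Return the PIDs of `ps aux` lines whose text contains `needle`.

    In `ps aux` output the PID is the second column; the first line is
    the header and is skipped.
    """
    pids = []
    for line in ps_output.splitlines()[1:]:
        if needle in line:
            pids.append(int(line.split()[1]))
    return pids
```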

Flask-APScheduler · 程序员宅基地

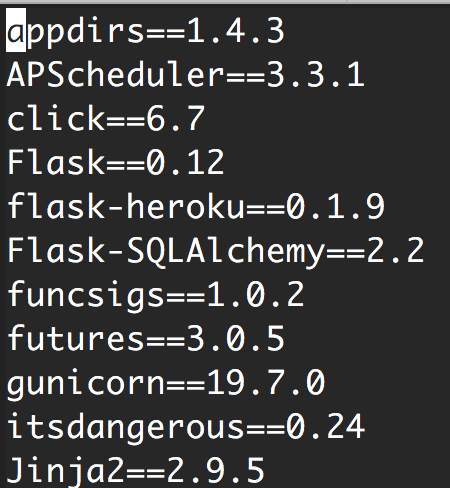

Pinned requirements from one such project:

```text
APScheduler==3
werkzeug==
Flask==0.12
requests==
lxml==3.7.2
PyExecJS==1.5.1
click==7.0
gunicorn==19.9.0
pymongo
redis
```

Answer 1: your additional threads must be initiated from the same app that is called by the WSGI server. The answer's example creates a background thread that executes every 5 seconds and manipulates data structures that are also available to Flask-routed functions. It begins:

```python
import threading
import atexit
from flask import Flask

POOL_TIME = 5  # Seconds
```
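The rest of that answer is cut off here. The following is a sketch of the same idea, not the answer's code. It uses a self-re-arming `threading.Timer` guarded by a lock and cancelled through `atexit`, with plain stdlib threads instead of APScheduler. `PeriodicWorker`, `shared_state` and `start_background_updates` are hypothetical names.

```python
import atexit
import threading

POOL_TIME = 5  # seconds between runs

shared_state = {"ticks": 0}    # data that Flask route functions would also read
state_lock = threading.Lock()  # guards shared_state across threads


class PeriodicWorker:
    """Runs `func` every `interval` seconds by re-arming a threading.Timer."""

    def __init__(self, interval, func):
        self.interval = interval
        self.func = func
        self._timer = None
        self._stopped = True
        self._lock = threading.Lock()

    def _schedule(self):
        # Caller must hold self._lock.
        self._timer = threading.Timer(self.interval, self._run)
        self._timer.daemon = True  # don't keep the process alive at exit
        self._timer.start()

    def _run(self):
        self.func()  # if func raises, the chain of timers stops here
        with self._lock:
            if not self._stopped:
                self._schedule()

    def start(self):
        with self._lock:
            if self._stopped:
                self._stopped = False
                self._schedule()

    def stop(self):
        with self._lock:
            self._stopped = True
            if self._timer is not None:
                self._timer.cancel()


def update_state():
    # Runs in the timer thread; take the lock before touching shared data.
    with state_lock:
        shared_state["ticks"] += 1


def start_background_updates(interval=POOL_TIME):
    """Call from create_app(), i.e. inside the process the WSGI server runs."""
    worker = PeriodicWorker(interval, update_state)
    worker.start()
    atexit.register(worker.stop)  # cancel the pending timer on shutdown
    return worker
```

A route can then read `shared_state` while holding `state_lock`. Under Gunicorn, each worker that calls `start_background_updates()` gets its own timer thread, so the same duplication caveats as for APScheduler apply.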

Flask Tutorial (12): Project Deployment · 迷途小书童's notes

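NGINX accelerates content and application delivery, improves security, and facilitates availability and scalability for the busiest web sites on the Internet.

I'm busy writing a small game server to try out Flask. The game exposes an API via REST to users. It's easy for users to perform actions and query data; however, I'd like to service the "game world" outside the `app.run()` loop to update game entities, etc. Given that Flask is so cleanly implemented, I'd like to see if there's a Flask way to do this.

Create a `.env` environment-variable file in the working directory; the default parameters are as follows. Note: to distinguish them from other environment variables, `SPIDER_ADMIN_PRO_` is used as the variable prefix. If you run with `python3 -m`, you need to add the variables to the environment: create a file `env.bash` in the working directory. Note that in this case there must be no spaces after the equals sign.

```bash
# flask service configuration
export
```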

Problem when use Gunicorn · Issue #13 · viniciuschiele/flask-apscheduler · GitHub

Automating unattended changes to server account passwords with Ansible and Flask-APScheduler · Lxl1531's blog · CSDN blog

```python
import os
import sys
import time
from importlib import import_module

from apscheduler.events import EVENT_JOB_EXECUTED, EVENT_JOB_ERROR
from apscheduler.executors.pool import ThreadPoolExecutor, ProcessPoolExecutor
from apscheduler.jobstores.sqlalchemy import SQLAlchemyJobStore
# …
```

An app that starts its own background threads can be launched from Gunicorn with something like this:

`gunicorn -b … --log-config log.conf --pid=app.pid myfile:app`

In addition to using pure threads or the Celery queue (note that flask-celery is no longer required), you could also have a look at flask-apscheduler.

Flask Tutorial (20): Flask-APScheduler · Programmer Sought

Onkenegg Architecturediagram Is480

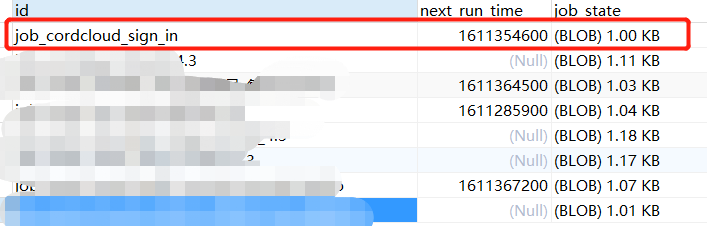

I use APScheduler (`BackgroundScheduler` with an SQLAlchemy job store) with a Flask app on Gunicorn, and I followed the first and second pieces of advice: I run Gunicorn with the `--preload` flag, so `scheduler.start()` runs only in the master process, and the jobs are executed there as well.

Flask-APScheduler is a Flask extension which adds support for APScheduler. One snippet prints "hello" after waiting for 1 second, and then "world" after waiting for another 2 seconds.

MySQL pinned every version; it seems to have uninstalled the newer libraries on the system and replaced them with older ones. Try restarting the panel by entering `/etc/init.d/bt 3`; it looks like there is a problem with the Python dependency libraries. BT Panel (宝塔面板) repair tool.

Deploying a Flask app with Flask + gevent + Nginx + Gunicorn + Supervisor · 北漂的雷子 · cnblogs (博客园)

How to use threading.Condition to wait for several Flask-APScheduler one-off jobs to complete execution in your Python 3 application · Techcoil Blog

Flask-APScheduler + Gunicorn workers: still running task twice after socket fix (asked 2 years, 11 months ago). I have a Flask app where I use Flask-APScheduler to run a scheduled query on my database and send an email via a cron job. I'm running my app via Gunicorn with the

Gunicorn provides many command-line options; see `gunicorn -h`. For example, to run a Flask application with 4 worker processes (`-w 4`) binding to localhost port 4000 (`-b 127.0.0.1:4000`):

`$ gunicorn -w 4 -b 127.0.0.1:4000 myproject:app`

Note: this is a manual procedure to deploy a Python Flask app with Gunicorn, supervisord and nginx; a fully automated CI/CD method is described in another post. Log in to the server and clone the source repository. Generate an SSH key pair: log in to the server and generate a new SSH key pair for deployment.
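The question does not show its "socket fix", so what follows is only a sketch of the usual idea, under that assumption. Each worker tries to bind a TCP socket to one fixed local port. Only one process can hold a given host/port, so only the winner starts the scheduler. The helper name and the port you choose are hypothetical. The lock works only within one host: several containers or machines would each run their own scheduler.

```python
import socket


def try_acquire_socket_lock(port, host="127.0.0.1"):
    """Bind a TCP socket to a fixed local port as a cross-process lock.

    Returns the bound socket if this process won (keep it referenced for
    the process lifetime; the OS frees the port when the process exits),
    or None if another process already holds the port.
    SO_REUSEADDR is deliberately not set, so a second bind fails.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))
    except OSError:
        sock.close()
        return None
    return sock
```

Usage: `_lock = try_acquire_socket_lock(47200)`, then `if _lock is not None: scheduler.start()`. Call it in each worker after the fork (that is, without `--preload`), so that the socket is not shared across processes.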

Discoverdaily: a Flask web application built with the Spotify API and deployed on Google Cloud · LaptrinhX

How to set up Python Flask applications with Gunicorn and Nginx on Ubuntu · DEV Community

In multiple processes, to avoid generating multiple scheduler instances and executing tasks multiple times, I tried running Gunicorn like this: `gunicorn -b … manage:app -k gevent -w 8 --preload`. Although only one scheduler was generated, the tasks don't run. Then I tried using a lock to avoid generating multiple scheduler instances; the result is

Deploying Flask services with Gunicorn: as a Python developer, I usually build the service interfaces I need at work with Flask. Flask is very easy to get started with, and its built-in `app.run(host="0.0.0.0", port=7001)` is very convenient for debugging. For production, however, it falls short in both handling high concurrency and robustness, so it is usually paired with a WSGI container for the production

Kill APScheduler add_job based on ID · Stack Overflow

Flask started but can't be accessed; using Flask-APScheduler to control scheduled tasks · 影子斜's blog · CSDN blog

Flask: I want to make a Flask + Nginx + Gunicorn deployment. I have Nginx set up and running, and I run Gunicorn as described in the docs: `gunicorn app:app`.

Flask's official documentation currently lists five commonly used WSGI containers, all of which implement WSGI; we use the most common one, Gunicorn. Next we can start the Gunicorn service. Using the example from Flask Tutorial (10): Form Handling with Flask-WTF, go to the source directory and run `gunicorn -w 2 -b :5000 run:app`. In this command, `-w` is the number of processes that handle requests.

Display Machine State Using Python3 With Flask

Flask-APScheduler · Javashuo

Data models: for this project, things are simple compared to most applications. We only have a single table, which is stored in the `mystripeapp/models.py` file:

```python
import sqlalchemy
from flask import url_for
from sqlalchemy.ext.declarative import declared_attr
from mystripeapp.bootstrap import app, db
from flask_login.mixins import UserMixin
# …
```

Ask Flask: background thread with Flask · r/flask

APScheduler and FastAPI interaction · 一只路过的小码农cxy · 程序员资料

Flask implementation of timers: Flask-APScheduler · Programmer Sought

The Flask Mega-Tutorial Part XXII: Background Jobs · miguelgrinberg.com

Question: how to run APScheduler while using Gunicorn via systemd · Issue #135 · jcass77/django-apscheduler · GitHub

Seeing the pay slip a Python engineer posted on Douyin, I fell silent · 壹讀

Hauusu Giters

Gunicorn duplicating scheduler jobs · Issue #13 · tiangolo/meinheld-gunicorn-flask-docker · GitHub

How to use Flask-APScheduler in your Python 3 Flask application to run multiple tasks in parallel from a single HTTP request · Techcoil Blog

Are there any examples on how to use this within the Flask app context? · Issue #34 · viniciuschiele/flask-apscheduler · GitHub

Solving Gunicorn + Flask being unable to receive chunked data · Programmer Sought

Gentelella template powered by Flask · LaptrinhX

Packaging and deploying a Python project with Flask · Chuta9217's blog · 程序员资料

Python 3 Flask web development (9): the Flask-APScheduler scheduled-task framework · 测试媛 · CSDN blog

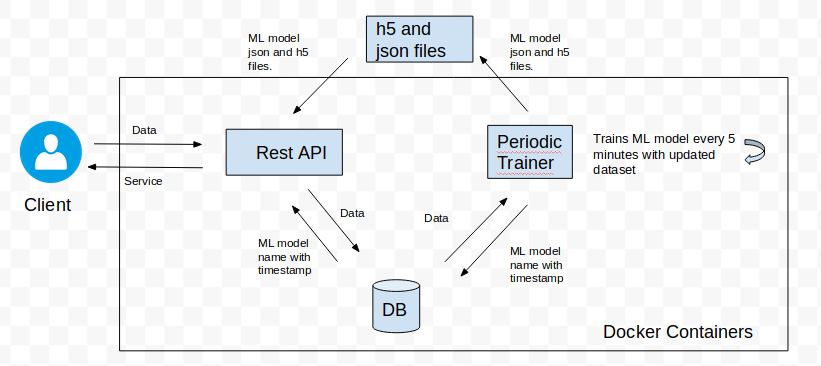

Deploying a Keras model in production with periodic training · by Omert · Patron Labs · Medium

Flask web development tutorial (20): Flask-APScheduler · Bilibili

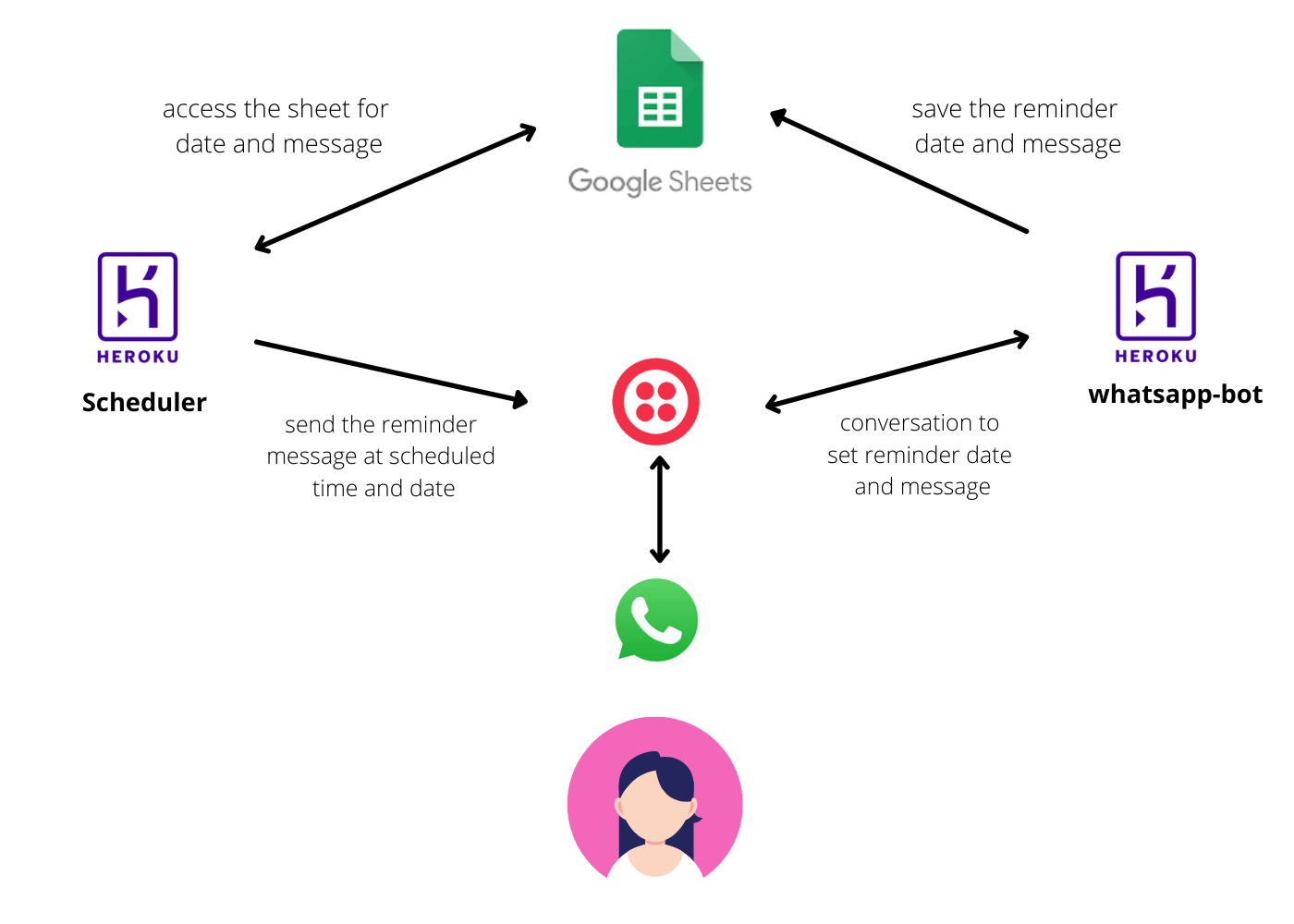

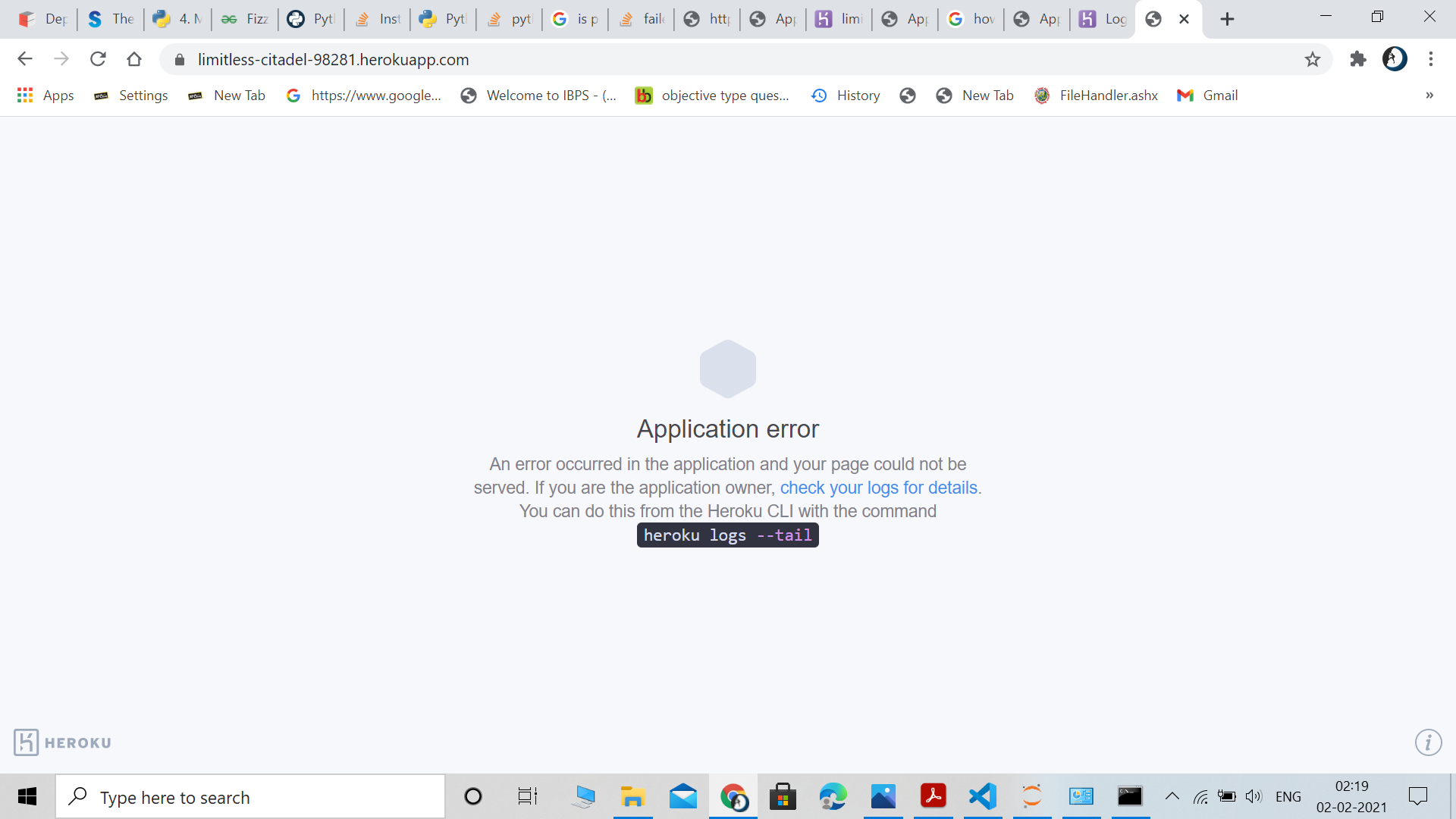

How to build a Taipei U-Bike API with Heroku and Flask (2): Introducing Heroku · by Bryan Yang · 亂點技能的跨界人生 · Medium

Deploying Flask-APScheduler with Gunicorn: a record of pitfalls · 链滴

RF case select checkbox missing on CentOS 7 Linux · Githubmemory

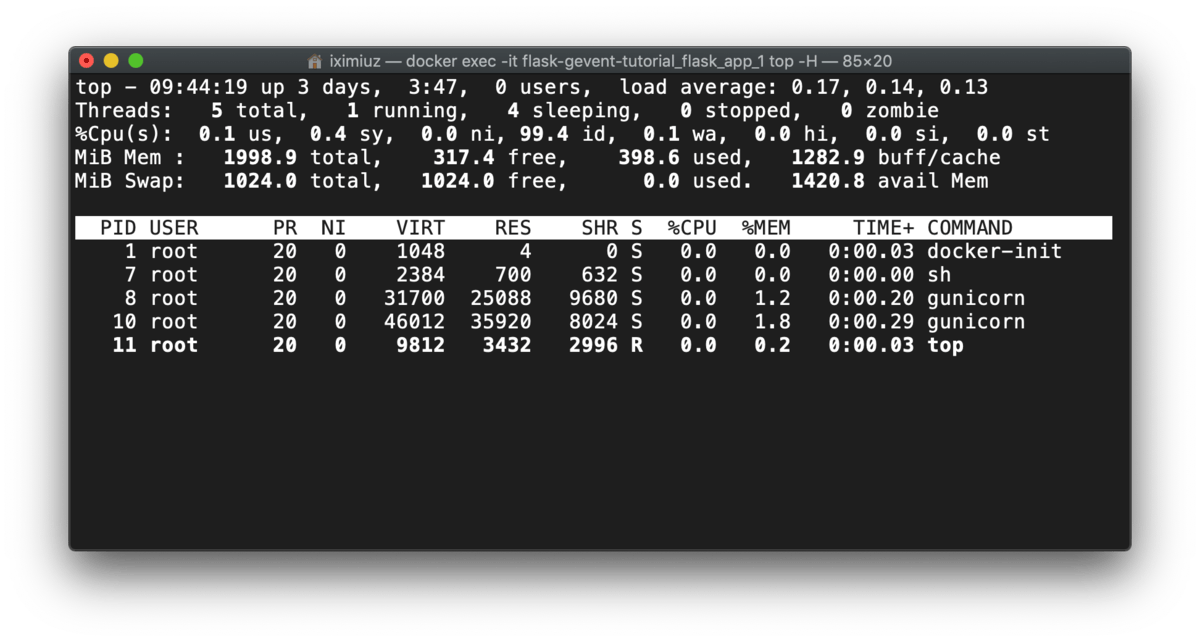

How to use Flask with gevent (uWSGI and Gunicorn editions) · Ivan Velichko

Python Flask web back-end in practice · Zhihu

ModuleNotFoundError: No module named 'apscheduler.schedulers'; 'apscheduler' is not a package · Arnolan's blog · 程序员资料

Logging Gunicorn with APScheduler in Flask · Programmer Sought

Gunicorn preload task don't work · Issue #87 · viniciuschiele/flask-apscheduler · GitHub

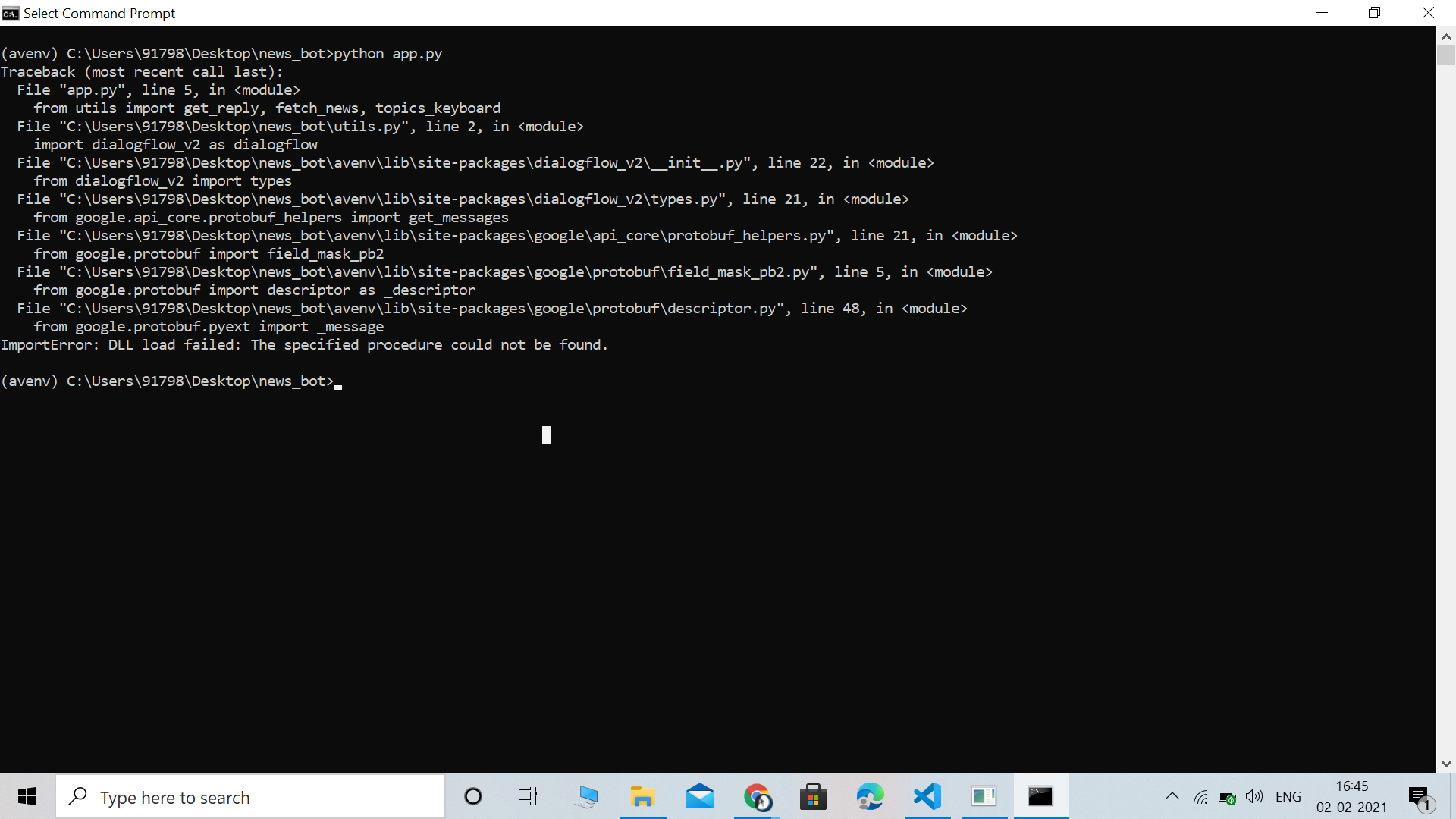

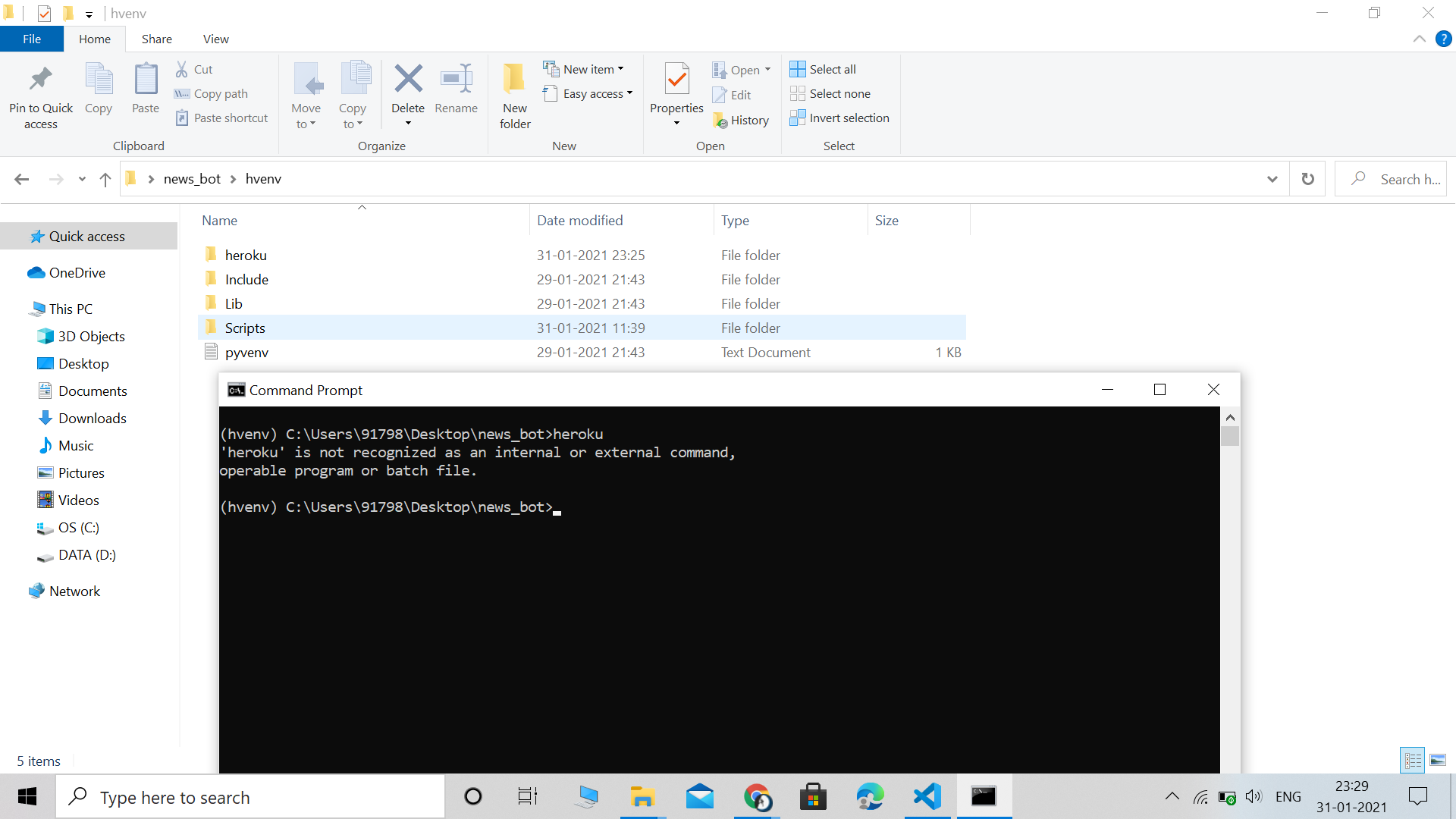

Deploying Flask app for Telegram bot on Heroku · Coding Blocks Discussion Forum

Docs · maestroserver.io

Flask-APScheduler tutorial · Programador Clic

Gunicorn, Flask, APScheduler

Scheduled Scrapy crawl with APScheduler fails on Linux but succeeds locally on Windows · SegmentFault (思否)

openEuler Repo: please follow the documentation to set up the openEuler repo. We also have mirror sites globally; visit our official mirror list and select a suitable site. Don't hesitate to contact us if you have any questions. · Index of openEuler

Add job from Gunicorn worker when scheduler runs in master process · Issue #218 · agronholm/apscheduler · GitHub

Gunicorn: TypeError: __call__() takes from 1 to 2 positional arguments but 3 were given, with Flask application factory · Stack Overflow

Application context problem when using APScheduler in Flask · V2EX

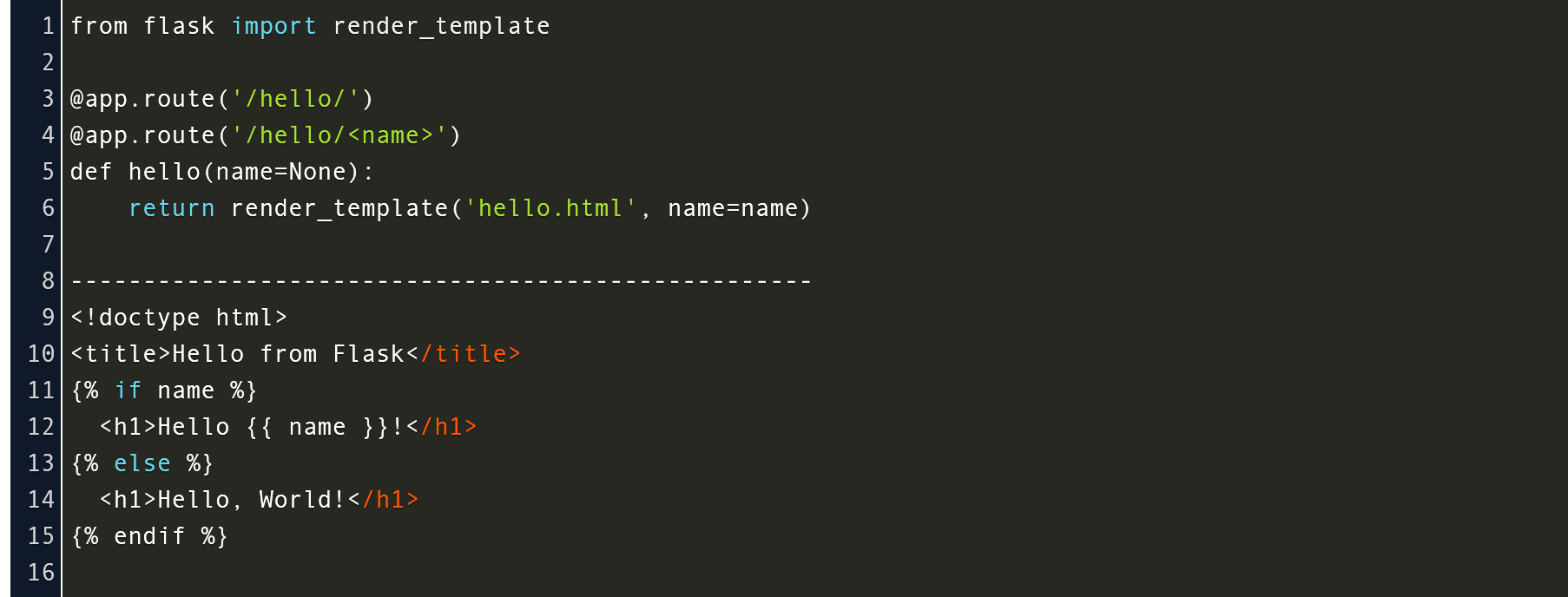

Flask: set default route · code example

How to run a scheduled task to run a Flask app constantly · r/flask

Disable reloader of Gunicorn Flask application · Stack Overflow

Steroids

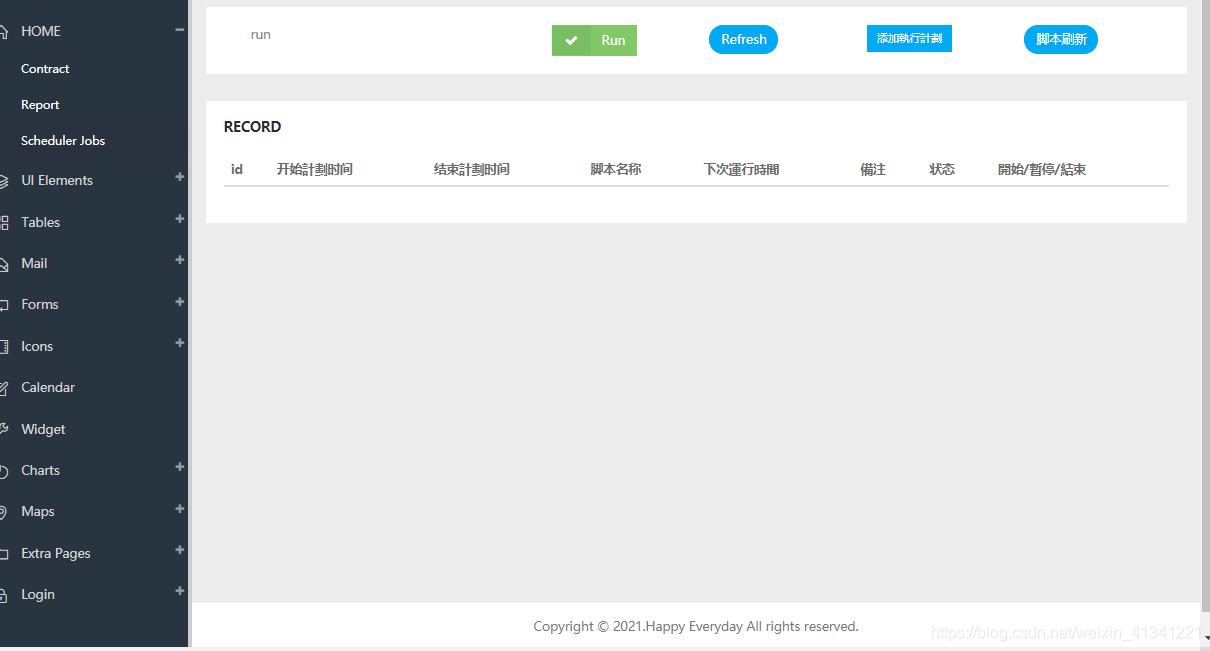

Example of a scheduling web app built on Flask and the Flask-APScheduler framework that runs .py scripts on a schedule · Alfie's blog · 程序员宝宝

How to create an interval task that runs periodically within your Python 3 Flask application with Flask-APScheduler · Techcoil Blog

Deploying a Flask project with Gunicorn + Nginx on Alibaba Cloud: fix for static files not loading · Python的神奇之旅 · 程序员宅基地

Template for deploying ML models using Flask + Gunicorn + Nginx inside Docker

How to solve "No API definition provided" error for Flask-RESTPlus app on Cloud Foundry · Techcoil Blog

APScheduler: a lightweight Python scheduled-task framework · Python examples · 何三笔记

Flask-APScheduler scheduled tasks that query and operate on the database (multiple files/modules) · Arnolan's blog · 程序员宝宝

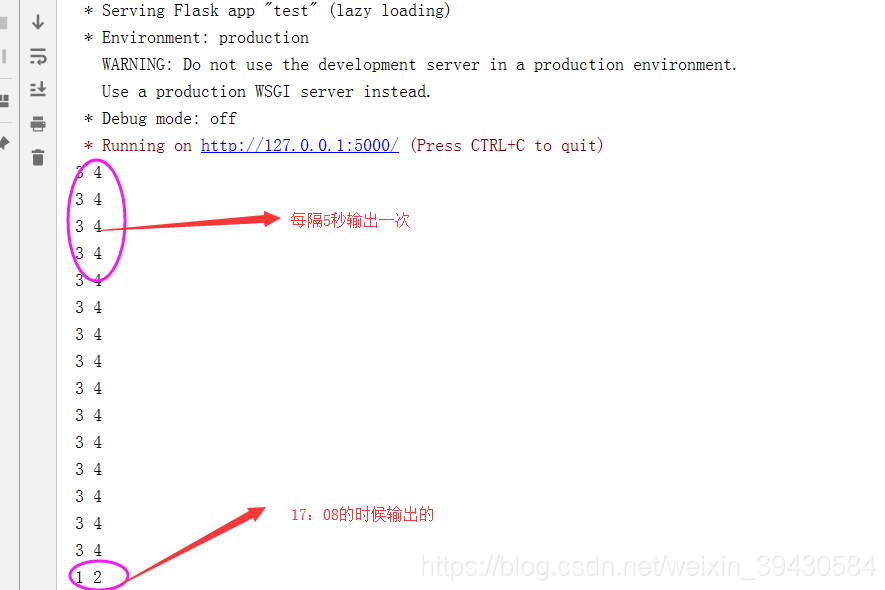

Flask: the problem of APScheduler not executing and the bug of repeated execution when starting with Gunicorn and Flask · 10相濡以沫 · CSDN blog

A demo of a minimal Nginx + uWSGI + Flask + Redis stack using Docker in less than 42 MB (last update: Oct 15 21)

Monitor your Flask web application automatically with Flask-MonitoringDashboard · by Johan Settlin · Flask-MonitoringDashboard Tutorial · Medium

Flask-APScheduler · Bountysource

A Flask-based task management system: key points · L是晴子的球迷's blog · 程序员宝宝

Flask Dashboard: StarAdmin design (free version) · AppSeed

Antoine Fourmy / flask-gentelella · GitLab

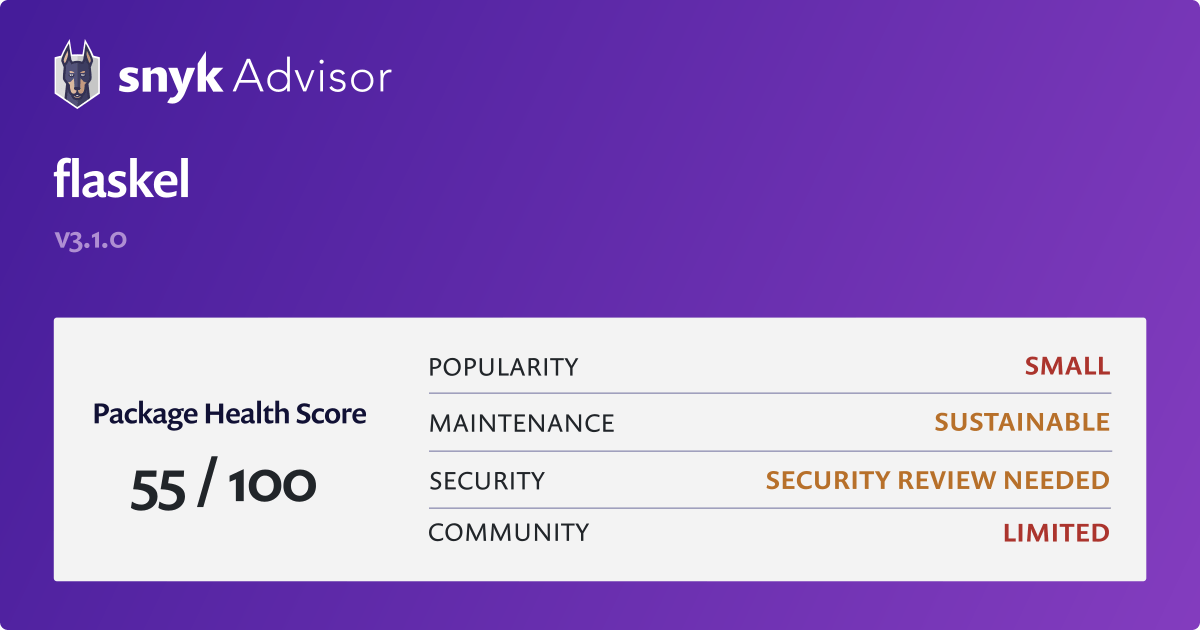

flaskel: Python package health analysis · Snyk

[Solved] Django: make sure only one worker launches the APScheduler event in a Pyramid web app running multiple workers · Code Redirect
